YouTube Rolls Out a Disclosure Tool for Generative AI Content

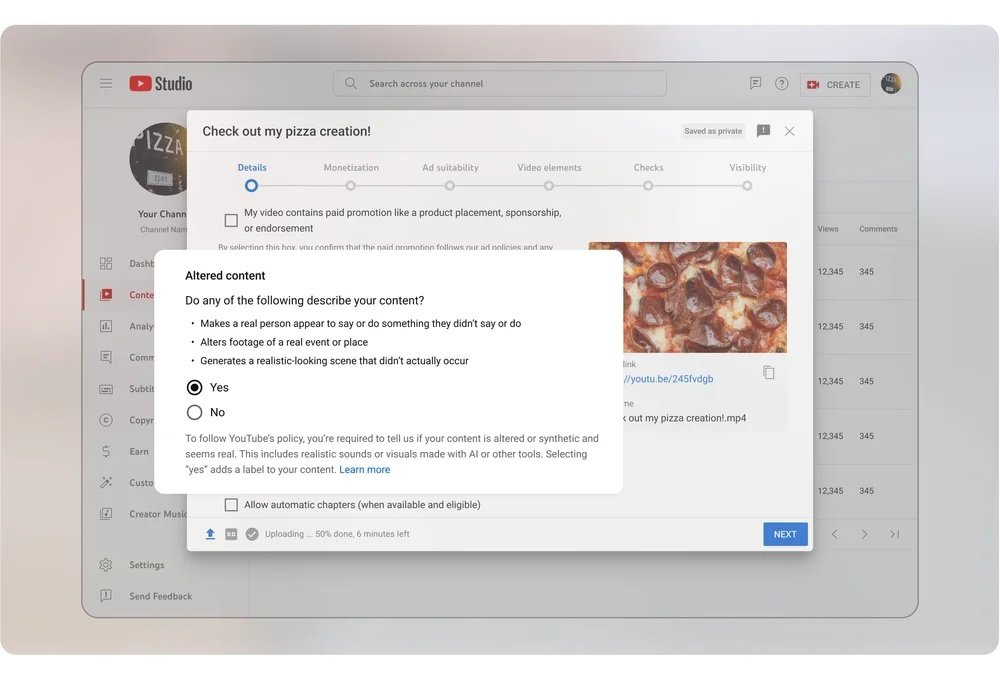

YouTube has started rolling out a disclosure tool for altered or synthetic content. Creators must use this tool to tell viewers when realistic content has been created, altered, or synthesized using generative AI. This includes using the likeness of a real person, altering footage of real events or places, and generating realistic scenes.

However, creators don’t need to use the tool to disclose generative AI use for productivity purposes, such as generating scripts or content ideas, or when the synthetic media is unrealistic or the changes are inconsequential.

Labels will appear in video descriptions, and for videos on sensitive topics such as health, news, elections, or finance, they will also appear on the video itself. YouTube will also add labels automatically when creators fail to disclose such content. Eventually, YouTube will introduce enforcement measures for creators who don’t disclose.

Why It Matters: YouTube’s approach to disclosure is intriguing. It requires creators to use the tool only in specific scenarios, a rule that is clear but still open to interpretation, which could lead some creators not to disclose when they should.

One positive aspect is that YouTube’s algorithm won’t factor disclosure labels into recommendations or rankings. That should give creators some peace of mind and encourage more of them to use the tool when appropriate.